Serverless GraphQL Federation Router for AWS Lambda

We have officially released the first version of the Cosmo Serverless GraphQL Federation Router for the AWS Lambda runtime. This is a huge milestone for us and for the GraphQL community: it is the first time a vendor officially supports running a GraphQL Federation Gateway on AWS Lambda. Let's break down what this means for you and your team. Before we dive into the details, let's first talk about what a GraphQL Federation Gateway is.

What is a GraphQL Federation Router / Gateway?

A GraphQL gateway, also known as a GraphQL router, acts as a single access point that aggregates data from multiple sources, orchestrating responses to GraphQL queries. It provides a unified GraphQL schema from different microservices, databases, or APIs, streamlining query processing and schema management. By routing requests to the appropriate services and merging the responses, a GraphQL gateway simplifies client-side querying, enabling seamless data retrieval from a variety of back-end services and systems. This architectural design enhances scalability, maintainability, and the ability to evolve API landscapes without impacting client applications.

To scale out a federated graph, though, you need more than just a gateway. You also need a schema registry, analytics, schema usage metrics, breaking change detection, and a lot more. For that, we've built Cosmo, a complete platform for managing your federated graph. Check out Cosmo on GitHub if you're interested; it's fully open source.

Why is AWS Lambda a good fit for a GraphQL Federation Router?

AWS Lambda provides a serverless computing service, offering a hassle-free solution where concerns about server provisioning, scaling, and infrastructure management are handled by AWS. This allows you to concentrate solely on your business logic. Although it might sound like a jargon-filled proposition, the practical benefits for companies are substantial. Operating gateway services is inherently complex and challenging, but AWS Lambda simplifies this process. It stands out as the most popular and mature serverless computing service, available in all regions and featuring an exceptionally generous free tier. These attributes make AWS Lambda an ideal choice for our specific needs.

AWS Lambda is event-driven and requires a small footprint

Because of Lambda's architecture, we had to make our router event-driven. AWS Lambda functions are invoked by events, so you can't simply run an HTTP server. Instead, you register a handler function that is invoked for each event. This is a big difference from traditional servers. Luckily, the community has already built many adapters to make this process as easy as possible. We decided to use the akrylysov/algnhsa library to handle event and response translation for us. This let us make our router Lambda-compatible with only a few lines of code.

As a side effect, our router can now be integrated into the existing AWS ecosystem. For example, a GraphQL mutation can be triggered by an SQS or S3 event. This allows us to build a very flexible and scalable system.

Is that all? Of course not; there is a lot more going on under the hood. We shrank the router binary to 19MB to keep the footprint small and cold starts fast. We also took a different approach to reporting metrics and traces to our collectors, which we cover in the next section.

OpenTelemetry support

Our router has built-in OpenTelemetry support for tracing and metrics. We also collect schema usage data from your GraphQL operations to give you insights into how your schema is used. This lets us determine whether a schema change is safe to deploy.
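Usage data can answer "is this change safe?" in a simple way. If a field removed by a schema change was selected by any operation during the observation window, the change would break those clients. The sketch below assumes a hypothetical `fieldUsage` map keyed by schema coordinates such as `Employee.email`, with request counts as values. Cosmo's actual usage data and checks are richer than this.

```go
package main

import (
	"fmt"
	"sort"
)

// fieldUsage maps a schema coordinate (e.g. "Employee.email") to how many
// requests selected it during the observation window (hypothetical shape).
type fieldUsage map[string]int

// breakingRemovals returns the fields in removed that operations still use.
// An empty result suggests removing them would not break current clients.
func breakingRemovals(usage fieldUsage, removed []string) []string {
	var inUse []string
	for _, coord := range removed {
		if usage[coord] > 0 {
			inUse = append(inUse, coord)
		}
	}
	sort.Strings(inUse) // deterministic output for reports
	return inUse
}

func main() {
	usage := fieldUsage{"Employee.email": 42, "Employee.fax": 0}
	fmt.Println(breakingRemovals(usage, []string{"Employee.fax"}))
	fmt.Println(breakingRemovals(usage, []string{"Employee.email", "Employee.fax"}))
}
```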

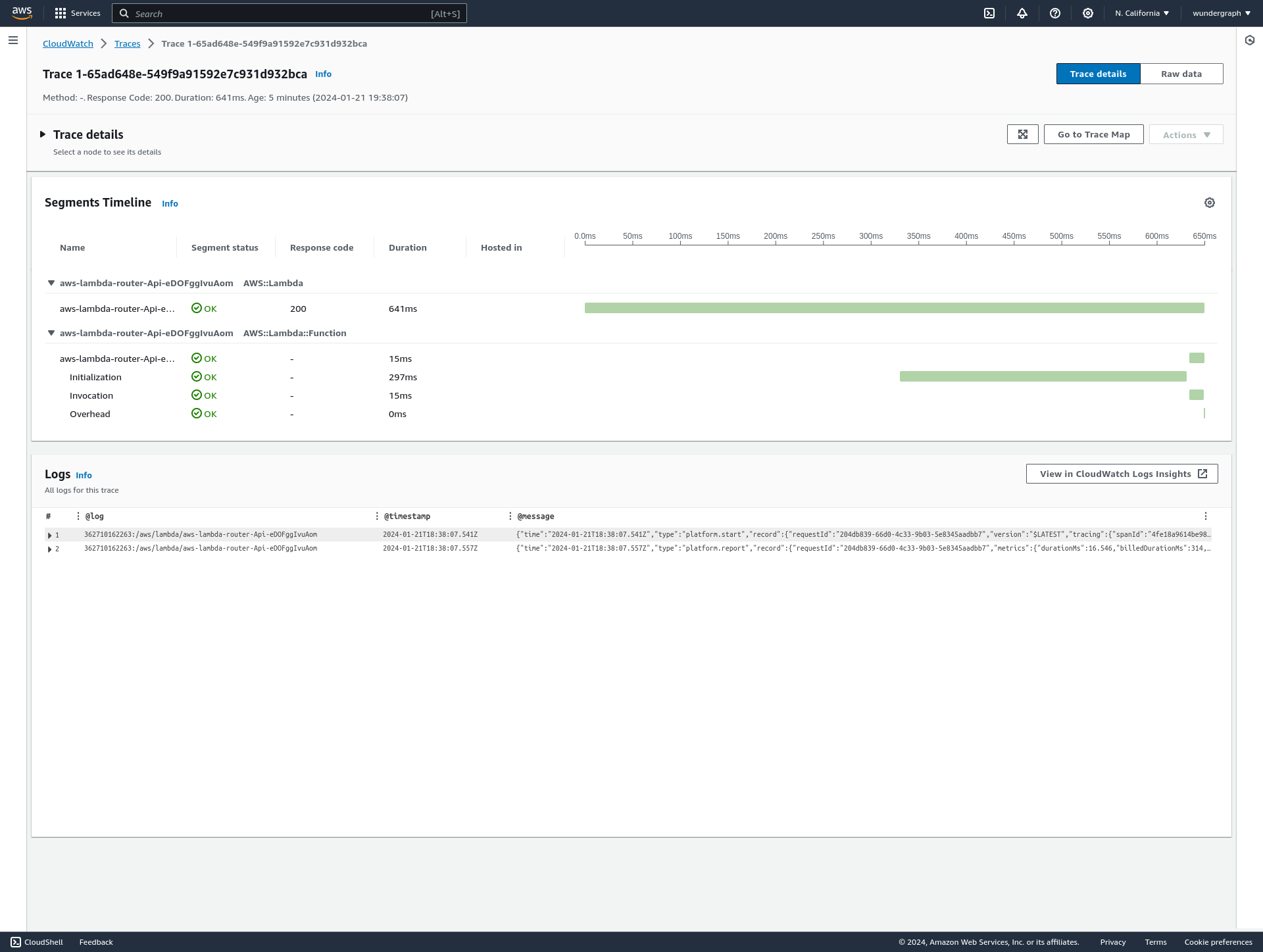

In an AWS Lambda handler, you can't do work after the response has been sent. Thinking in terms of servers, we first looked for a way to do arbitrary work in the background and on SIGTERM (#Issue). That does not fit the serverless mindset: your function may never receive a shutdown signal, so you have to find another way. Instead, we flush metrics and traces synchronously after each request.

This is tricky because it adds latency to the response. The cost is lower than it sounds, though, because we optimized the flush to be as fast as possible. All metrics and traces are sent to the collectors in parallel without waiting for write acknowledgements, and traces are sampled to reduce overhead. Flushing adds less than 75ms of latency to the response, a small price to pay for the benefits you get.

Costs / Performance

AWS Lambda has a free tier of 1 million requests per month. Beyond the free tier, assuming each request takes 1 second to process, you pay about $2.28 per 1 million requests. This estimate uses the 128MB memory size, which is the cheapest and least powerful option. CPU power scales proportionally with memory size.

Our tests showed that the router handles subsequent requests very well with this configuration, but at the cost of much longer cold starts. We recommend the 1536MB memory size instead, which is the sweet spot between fast cold starts and low costs. With it, we were able to wake up the router and make a subgraph request in 641ms. Initializing the Lambda function took just 300ms.

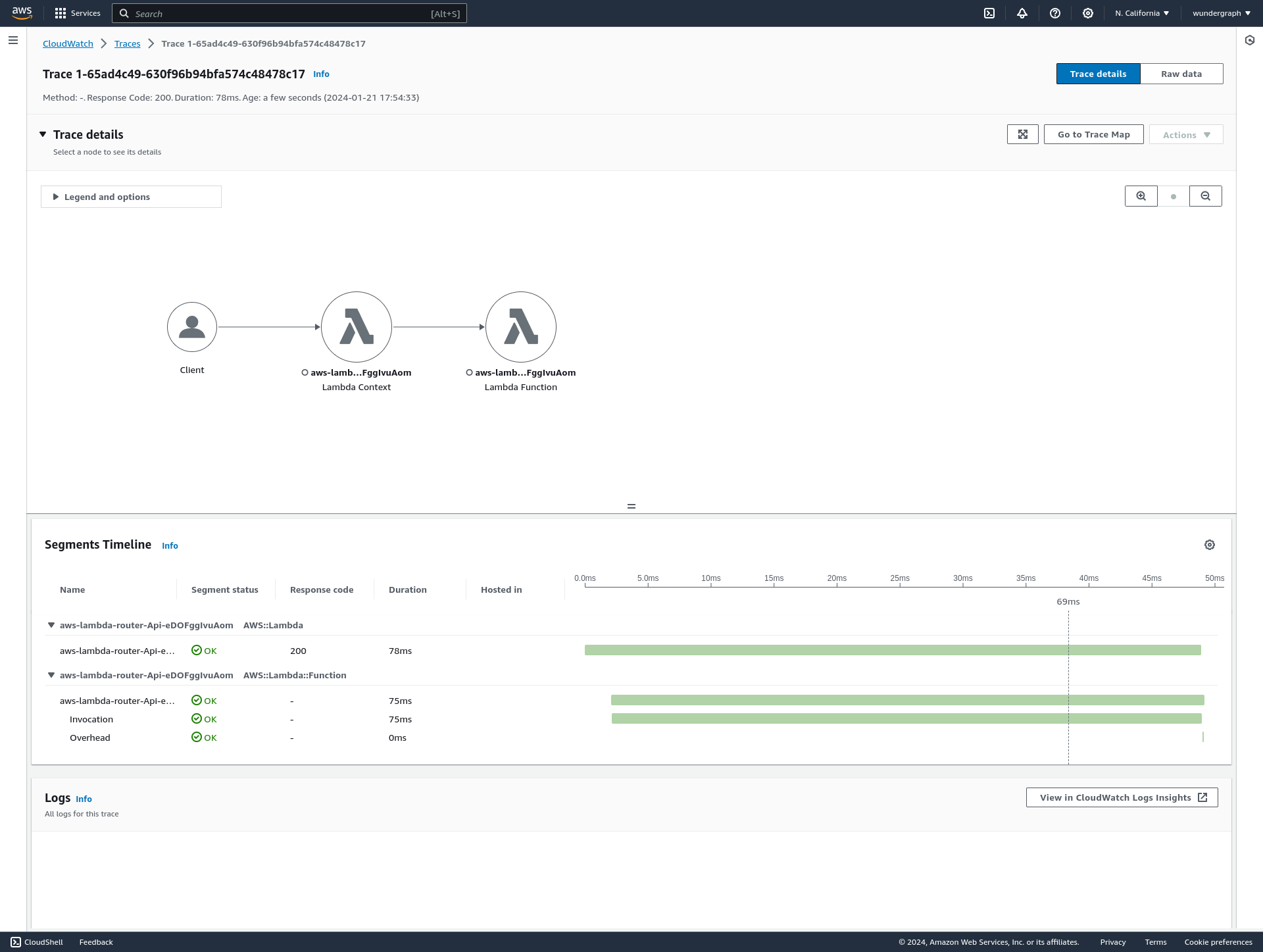

Once the Lambda function is warm, it handles subsequent requests in 80ms. In this scenario, we made a subgraph request to the Employees API, which is hosted on fly.io in the US West (LAX) region. This is a very good result and shows that our router performs well on AWS Lambda.
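Lambda reuses an execution environment across invocations. State built once during a cold start is therefore available to every warm request that follows, which is one reason warm requests are much faster than the first one. The minimal Go sketch below uses a hypothetical `routerConfig` to stand in for whatever the router builds at startup. `sync.Once` makes the once-per-environment initialization explicit. In a typical Go Lambda, the same effect comes from building this state in `main` before handing the handler to the runtime.

```go
package main

import (
	"fmt"
	"sync"
)

// routerConfig is a hypothetical stand-in for expensive startup state
// (parsed router.json, planner caches, HTTP clients).
type routerConfig struct {
	subgraphs []string
}

var (
	initOnce  sync.Once
	cfg       *routerConfig
	initCount int // counts initializations, for illustration only
)

// loadConfig simulates the expensive setup paid during a cold start.
func loadConfig() *routerConfig {
	initCount++
	return &routerConfig{subgraphs: []string{"employees"}}
}

// handle runs on every invocation. Only the first call in a fresh execution
// environment initializes; warm calls reuse cfg.
func handle(query string) string {
	initOnce.Do(func() { cfg = loadConfig() })
	return fmt.Sprintf("planned %q across %d subgraph(s)", query, len(cfg.subgraphs))
}

func main() {
	fmt.Println(handle("{ employees { id } }")) // cold: initializes
	fmt.Println(handle("{ employees { id } }")) // warm: reuses state
	fmt.Println("initializations:", initCount)
}
```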

Benchmark

We also tested the router's performance with 100 concurrent users over a period of 60 seconds, after warming the functions with 2 pre-runs. For simplicity, I didn't redeploy the router near my location and used the same setup as above instead. The router was deployed in the US West (Oregon) region, while I'm located in Germany. We would certainly see better results with the router and subgraphs deployed near my location, but I think the results already speak for themselves.

The router handled all requests with a p95 latency of 480ms. This is a very good result and shows that our router scales well on AWS Lambda.

I want to call out @codetalkio, who does a fantastic job with their repository demystifying the landscape of GraphQL Federation Gateways supported on AWS Lambda. It inspired us to support AWS Lambda as a first-class citizen.

Getting started

As you might know, we love open source! You can find the source code of the Serverless Router on GitHub. We manage separate releases for the AWS Lambda router; you can find them here.

You don't have to build the router yourself. You can download the latest release and follow the instructions below.

1. Download the Lambda Router binary from the official Router Releases page.
2. Create a .zip archive containing the binary and the router.json file. You can download the latest router.json with wgc federated-graph fetch. The .zip archive holds just these two files.
3. Deploy the .zip archive to AWS Lambda. You can use the SAM CLI, the AWS console, or your IaC tool of choice.

Conclusion

Using AWS Lambda, we made it ridiculously simple to deploy, operate and scale a GraphQL Federation Router / Gateway. We are very excited to see what you will build with it. We are looking forward to your feedback and contributions.

If you are a company and want to use our router in production, please reach out to us. We are happy to help you with the setup process and provide you with support.